Crucial questions for longtermists – Part 1

This post was written for Convergence Analysis. It introduces a collection of “crucial questions for longtermists”: important questions about the best strategies for improving the long-term future. This collection is intended to serve as an aid to thought and communication, a kind of research agenda, and a kind of structured reading list.

Introduction

The last decade saw substantial growth in the amount of attention, talent, and funding flowing towards existential risk reduction and longtermism. There are many different strategies, risks, organisations, etc. to which these resources could flow. How can we direct these resources in the best way? Why were these resources directed as they were? Are people able to understand and critique the beliefs underlying various views – including their own – regarding how best to put longtermism into practice?

Relatedly, the last decade also saw substantial growth in the amount of research and thought on issues important to longtermist strategies. But this is scattered across a wide array of articles, blogs, books, podcasts, videos, etc. Additionally, these pieces of research and thought often use different terms for similar things, or don’t clearly highlight how particular beliefs, arguments, and questions fit into various bigger pictures. This can make it harder to get up to speed with, form independent views on, and collaboratively sculpt the vast landscape of longtermist research and strategy.

To help address these issues, this post collects, organises, highlights connections between, and links to sources relevant to a large set of the “crucial questions” for longtermists. These are questions whose answers might be “crucial considerations” – that is, considerations which are “likely to cause a major shift of our view of interventions or areas”.

We collect these questions into topics, and then progressively break “top-level questions” down into the lower-level “sub-questions” that feed into them. For example, the topic “Optimal timing of work and donations” includes the top-level question “How will ‘leverage over the future’ change over time?”, which is broken down into (among other things) “How will the neglectedness of longtermist causes change over time?” We also link to Google docs containing many relevant links and notes.

What kind of questions are we including?

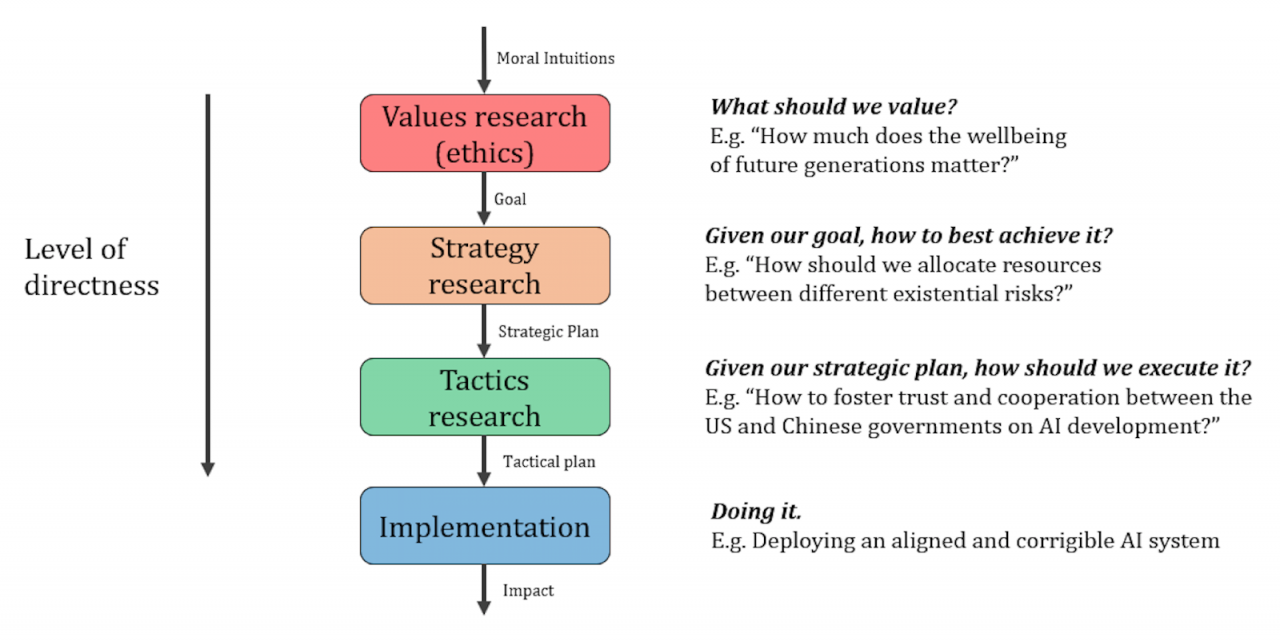

The post A case for strategy research visualised the “research spine of effective altruism” as follows:

This post can be seen as collecting questions relevant to the “strategy” level.

One could imagine a version of this post that “zooms out” to discuss crucial questions on the “values” level, or questions about cause prioritisation as a whole. This might involve more emphasis on questions about, for example, population ethics, the moral status of nonhuman animals, and the effectiveness of currently available global health interventions. But here we instead (a) mostly set questions about morality aside, and (b) take longtermism as a starting assumption.

One could also imagine a version of this post that “zooms in” on one specific topic we provide only a high-level view of, and that discusses that in more detail than we do. This could be considered to be work on “tactics”, or on “strategy” within some narrower domain. An example of something like that is the post Clarifying some key hypotheses in AI alignment. That sort of work is highly valuable, and we’ll provide many links to such work. But the scope of this post itself will be restricted to the relatively high-level questions, to keep the post manageable and avoid readers (or us) losing sight of the forest for the trees.

Finally, we’re mostly focused on:

- Questions about which different longtermists have different beliefs, with those beliefs playing an explicit role in their strategic views and choices

- Questions about which some longtermists think learning more or changing their beliefs would change their strategic views and choices

- Questions which it appears some longtermists haven’t noticed at all, the noticing of which might influence those longtermists’ strategic views and choices

These can be seen as questions that reveal a “double crux” that explains the different strategies of different longtermists. We thus exclude questions on which practically all longtermists agree, or on which all longtermists agree by definition.

A high-level overview of the crucial questions for longtermists

Here we provide our current collection and structuring of crucial questions for longtermists. The linked Google docs contain some further information and a wide range of links to relevant sources, and I intend to continue adding new links in those docs for the foreseeable future.

“Big picture” questions (i.e., not about specific technologies, risks, or risk factors)

- Value of, and best approaches to, existential risk reduction

- How “good” might the future be, if no existential catastrophe occurs?

- What is the possible scale of the human-influenced future?

- What is the possible duration of the human-influenced future?

- What is the possible quality of the human-influenced future?

- How does the “difficulty” or “cost” of creating pleasure vs. pain compare?

- Can and will we expand into space? In what ways, and to what extent? What are the implications?

- Will we populate colonies with (some) nonhuman animals, e.g. through terraforming?

- Can and will we create sentient digital beings? To what extent? What are the implications?

- Would their experiences matter morally?

- Will some be created accidentally?

- How “bad” would the future be, if an existential catastrophe occurs? How does this differ between different existential catastrophes?

- How likely is future evolution of moral agents or patients on Earth, conditional on (various different types of) existential catastrophe? How valuable would that future be?

- How likely is it that our observable universe contains extraterrestrial intelligence (ETI)? How valuable would a future influenced by them rather than us be?

- How high is total existential risk? How will the risk change over time?

- Where should we be on the “narrow vs. broad” spectrum of approaches to existential risk reduction?

- To what extent will efforts focused on global catastrophic risks, or smaller risks, also help with existential risks?

- Value of, and best approaches to, improving aspects of the future other than whether an existential catastrophe occurs

- What probability distribution over various trajectories of the future should we expect?

- How good have trajectories been in the past?

- How close to the appropriate size should we expect influential agents’ moral circles to be “by default”?

- How much influence should we expect altruism to have on future trajectories “by default”?

- How likely is it that self-interest alone would lead to good trajectories “by default”?

- How does speeding up development affect the expected value of the future?

- How does speeding up development affect existential risk?

- How does speeding up development affect astronomical waste? How much should we care?

- With each year that passes without us taking certain actions (e.g., beginning to colonise space), what amount or fraction of resources do we lose the ability to ever use?

- How morally important is losing the ability to ever use that amount or fraction of resources?

- How does speeding up development affect other aspects of our ultimate trajectory?

- What are the best actions for speeding up development? How good are they?

- Other than speeding up development, what are the best actions for improving aspects of the future other than whether an existential catastrophe occurs? How valuable are those actions?

- How valuable are various types of moral advocacy? What are the best actions for that?

- How “clueless” are we?

- Should we find claims of convergence between effectiveness for near-term goals and effectiveness for improving aspects of the future other than whether an existential catastrophe occurs “suspicious”? If so, how suspicious?

- Value of, and best approaches to, work related to “other”, unnoticed, and/or unforeseen risks, interventions, causes, etc.

- What are some plausibly important risks, interventions, causes, etc. that aren’t mentioned in the other “crucial questions”? How should the answer change our strategies (if at all)?

- How likely is it that there are important unnoticed and/or unforeseen risks, interventions, causes, etc.? What should we do about that?

- How often have we discovered new risks, interventions, causes, etc. in the past? How is that rate changing over time? What can be inferred from that?

- How valuable is “horizon-scanning”? What are the best approaches to that?

- Optimal timing for work/donations

- How will “leverage over the future” change over time?

- What should be our prior regarding how leverage over the future will change? What does the “outside view” say?

- How will our knowledge about what we should do change over time?

- How will the neglectedness of longtermist causes change over time?

- What “windows of opportunity” might there be? When might those windows open and close? How important are they?

- Are we biased towards thinking the leverage over the future is currently unusually high? If so, how biased?

- How often have people been wrong about such things in the past?

- If leverage over the future is higher at a later time, would longtermists notice?

- How effectively can we “punt to the future”?

- What would be the long-term growth rate of financial investments?

- What would be the long-term rate of expropriation of financial investments? How does this vary as investments grow larger?

- What would be the long-term “growth rate” from other punting activities?

- Would the people we’d be punting to act in ways we’d endorse?

- Which “direct” actions might have compounding positive impacts?

- Do marginal returns to “direct work” done within a given time period diminish? If so, how steeply?

- Tractability of, and best approaches to, estimating, forecasting, and investigating future developments

- How good are people at forecasting future developments in general?

- How good are people at forecasting impacts of technologies?

- How often do people over- vs. underestimate risks from new tech? Should we think we might be doing that?

- What are the best methods for forecasting future developments?

- Value of, and best approaches to, communication and movement-building

- When should we be concerned about information hazards? How concerned? How should we respond?

- When should we have other concerns or reasons for caution about communication? How should we respond?

- What are the pros and cons of expanding longtermism-relevant movements in various ways?

- What are the pros and cons of people who lack highly relevant skills being included in longtermism-relevant movements?

- What are the pros and cons of people who don’t work full-time on relevant issues being included in longtermism-relevant movements?

- Comparative advantage of longtermists

- How much impact should we expect longtermists to be able to have as a result of being more competent than non-longtermists? How does this vary between different areas, career paths, etc.?

- Generally speaking, how competent, “sane”, “wise”, etc. are existing society, elites, “experts”, etc.?

- How much impact should we expect longtermists to be able to have as a result of having “better values/goals” than non-longtermists? How does this vary between different areas, career paths, etc.?

- Generally speaking, how aligned with “good values/goals” (rather than with worse values, local incentives, etc.) are the actions of existing society, elites, “experts”, etc.?
